AMD × vLLM Semantic Router: Building Trustworthy, Evolvable Mixture-of-Models AI Infrastructure

AMD is a long-term technology partner of the vLLM community. That work began with accelerating the vLLM engine on AMD GPUs and ROCm™ Software, and it now extends to the next layer of the stack: vLLM Semantic Router (VSR).

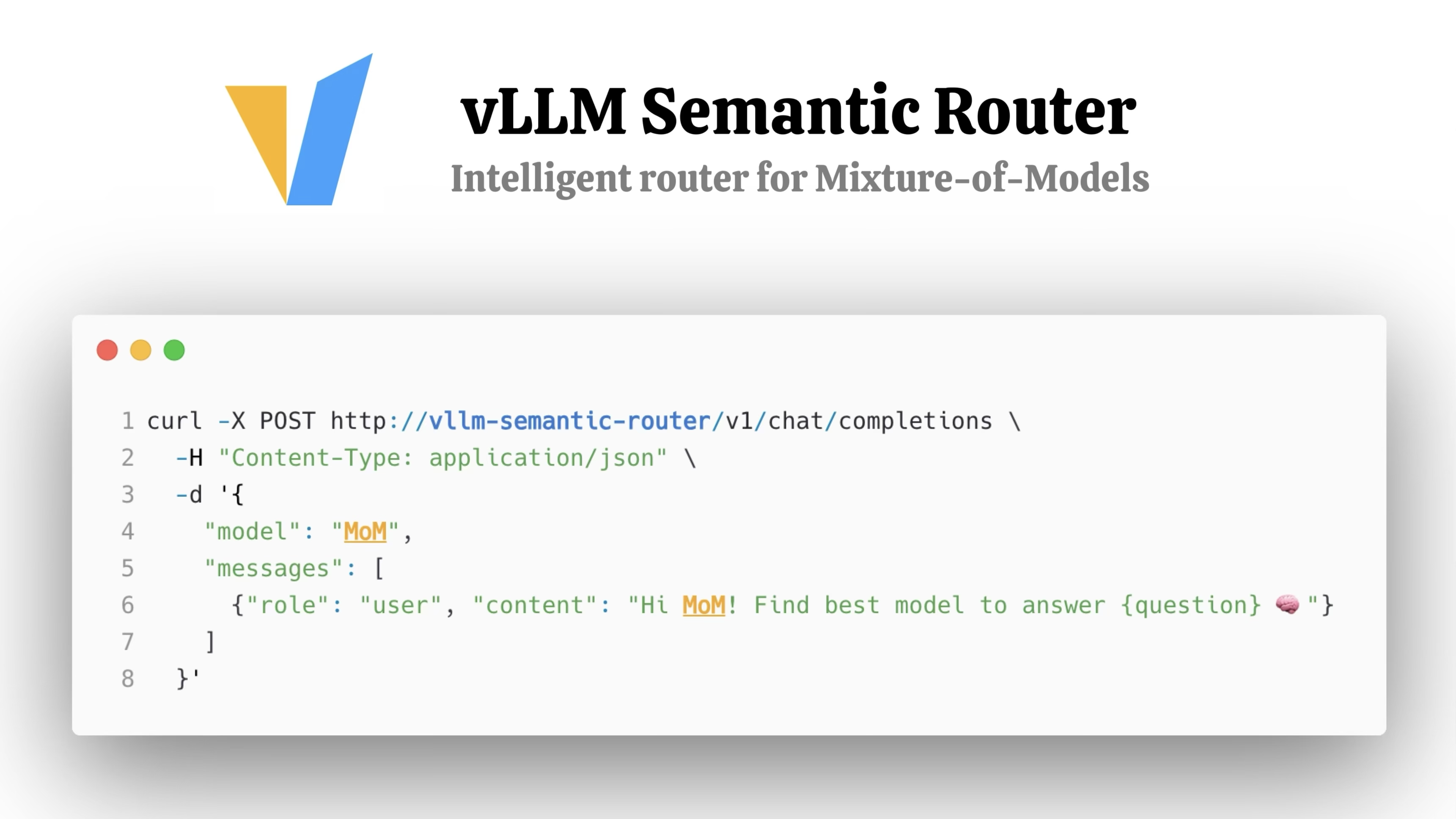

As AI moves from single models to Mixture-of-Models (MoM) architectures, the challenge shifts from "how big is your model?" to "how intelligently do you orchestrate many models?"

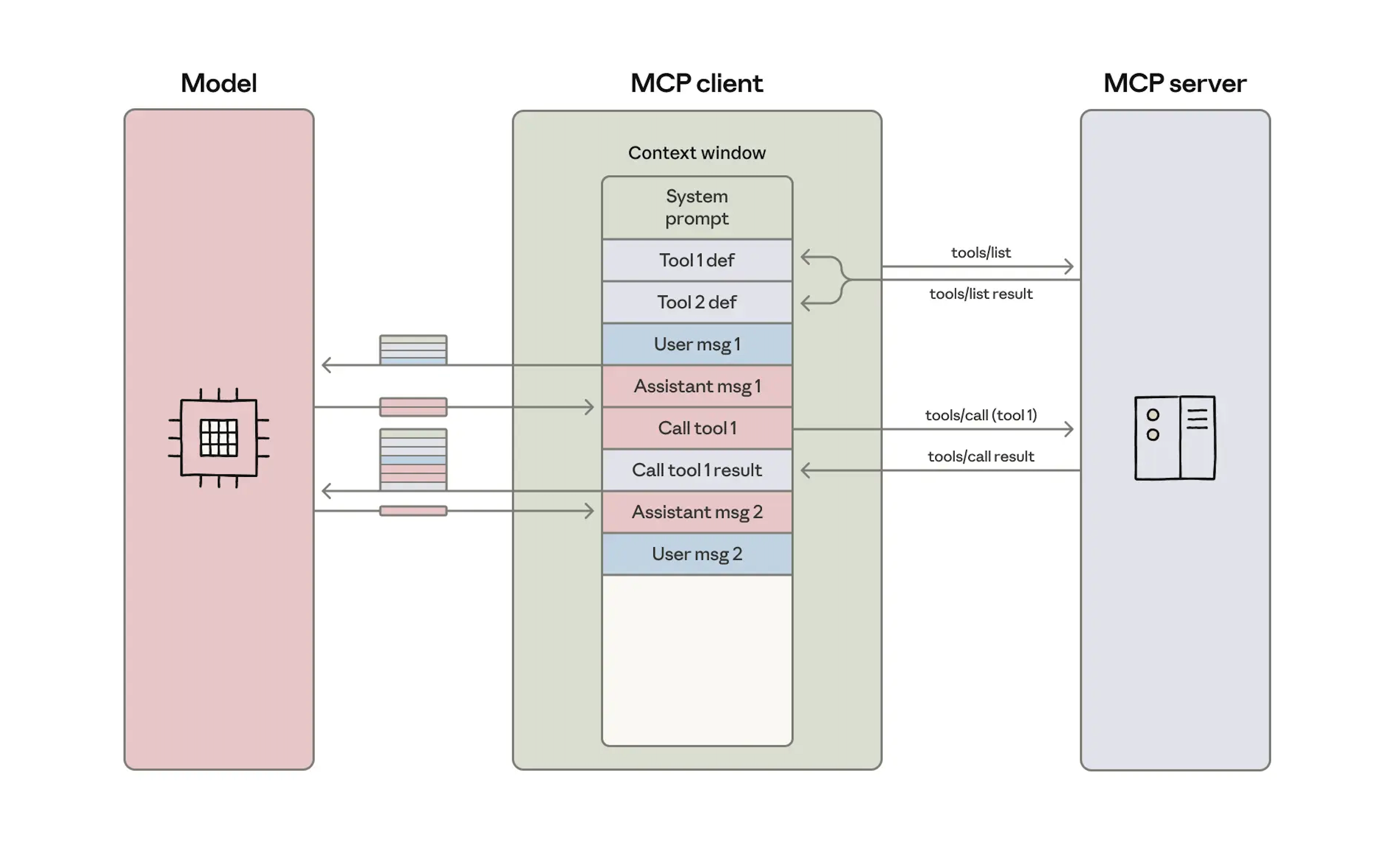

VSR acts as the brain of a multi-model AI system, deciding which model should handle each request. Together with AMD, we are building this layer around four goals:

- System Intelligence – semantic understanding, intent classification, and dynamic decision-making.

- Trustworthy – hallucination-aware, risk-sensitive, and observable.

- Evolvable – continuously improving via data, feedback, and experimentation.

- Production-ready on AMD – from CI/CD to online playgrounds to large-scale production.